Large Language Models (LLMs) have transformed natural language processing and perform well across a wide range of tasks. However, they still hallucinate (generate incorrect or nonsensical information), rely on knowledge that may be outdated, and reason in ways that are hard to inspect. Retrieval-Augmented Generation (RAG) has emerged as a promising way to address these issues by integrating external knowledge sources into the generation process.

Understanding Retrieval-Augmented Generation (RAG)

RAG enhances LLMs by retrieving relevant information from external databases or knowledge bases during the generation process. This gives the model access to up-to-date and domain-specific information, which improves accuracy and reduces the likelihood of hallucinations. By combining the knowledge stored in the LLM's parameters with external data, RAG produces more robust and credible output, especially for knowledge-intensive tasks.

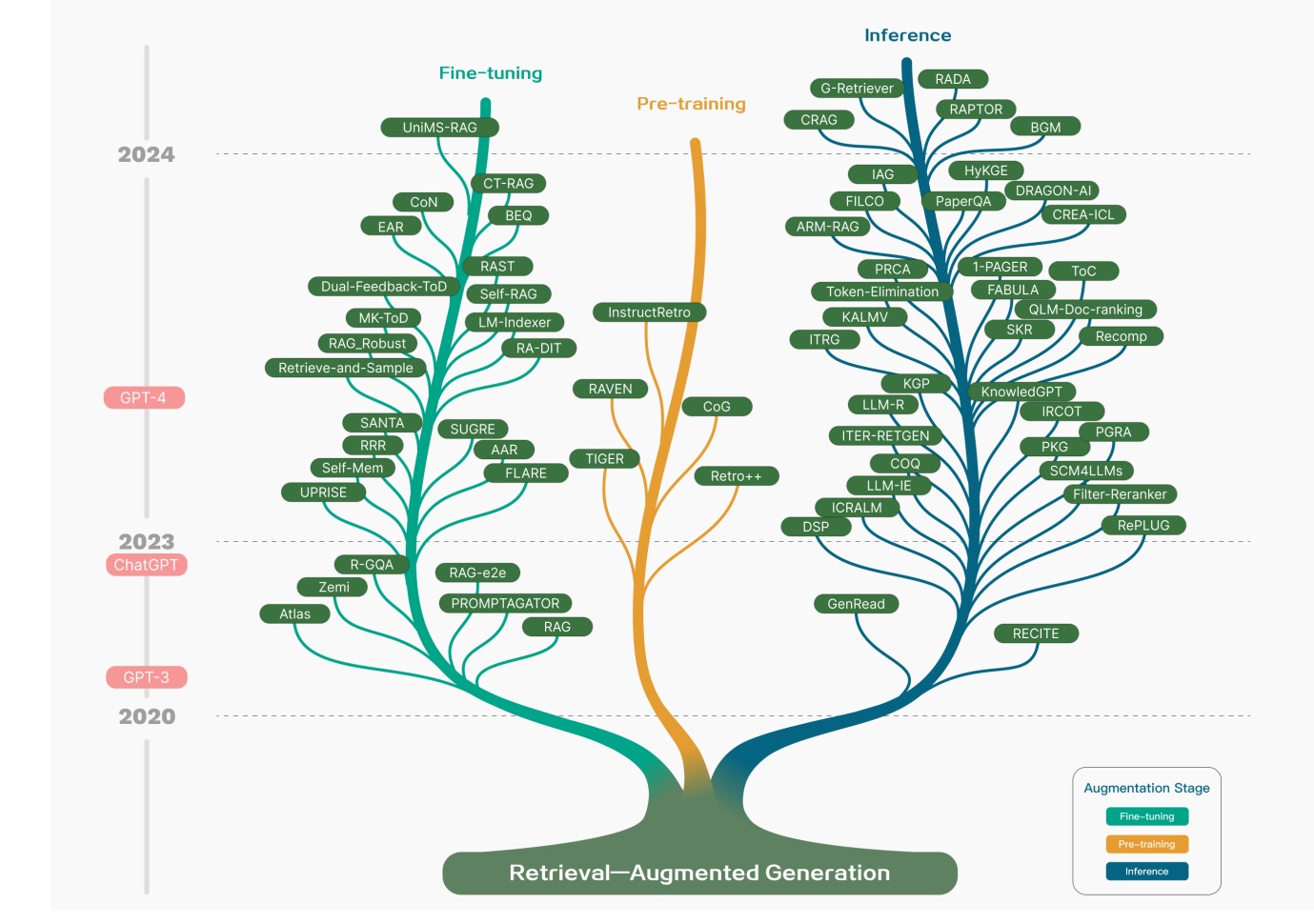
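To make the retrieve-then-generate flow concrete, here is a minimal sketch in Python. It is a toy under stated assumptions, not how any particular RAG system is implemented: the `DOCUMENTS` list, the word-overlap scoring, and the prompt template are all hypothetical stand-ins, and the actual LLM call is left out.

```python
import re
from collections import Counter

# Hypothetical in-memory knowledge base; a real system would query an external store.
DOCUMENTS = [
    "RAG retrieves passages from an external knowledge base before generation.",
    "Transformers use self-attention to model token interactions.",
    "Hallucinations are outputs that are fluent but factually incorrect.",
]


def tokenize(text):
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def overlap_score(query, document):
    """Count the tokens shared by query and document (a crude relevance proxy)."""
    query_counts = Counter(tokenize(query))
    doc_counts = Counter(tokenize(document))
    return sum((query_counts & doc_counts).values())


def retrieve(query, documents, k=2):
    """Return the k documents with the highest overlap score."""
    ranked = sorted(documents, key=lambda doc: overlap_score(query, doc), reverse=True)
    return ranked[:k]


def build_prompt(query, passages):
    """Place the retrieved passages in front of the question for the generator."""
    context = "\n".join(f"[{i + 1}] {p}" for i, p in enumerate(passages))
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {query}\nAnswer:"
    )


if __name__ == "__main__":
    question = "What does RAG retrieve from a knowledge base?"
    passages = retrieve(question, DOCUMENTS, k=1)
    # The resulting prompt would be sent to an LLM; that call is omitted here.
    print(build_prompt(question, passages))
```

The point of the sketch is the ordering: retrieval happens first, and the generator only ever sees the question together with whatever the retriever returned.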

Evolution of RAG Paradigms

The development of RAG can be categorized into three main paradigms:

- Naive RAG: In this initial approach, retrieval was combined directly with an LLM, without significant optimization or integration strategies. The primary goal was to supplement the model's built-in knowledge with external information.

- Advanced RAG: This paradigm introduced more sophisticated retrieval techniques, such as semantic search and relevance ranking, to ensure that the retrieved information closely aligns with the input query. Advanced RAG also explored better integration methods between retrieval and generation components.

- Modular RAG: The latest paradigm emphasizes a modular design where retrieval and generation components are developed and optimized independently. This approach allows for more flexibility and adaptability, enabling the integration of various retrieval systems and generation models based on specific requirements.
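The modular idea can be illustrated with a short sketch in which the retriever and the generator are plain callables that a pipeline wires together. The names `make_pipeline`, `keyword_retriever`, `return_all_retriever`, and `template_generator` are hypothetical and chosen only for illustration; the generator here is a template, not a real model.

```python
from typing import Callable, List

# Assumed interfaces: any function with these shapes can be plugged in.
Retriever = Callable[[str], List[str]]
Generator = Callable[[str, List[str]], str]


def make_pipeline(retriever: Retriever, generator: Generator) -> Callable[[str], str]:
    """Wire an arbitrary retriever and generator into one query -> answer function."""
    def run(query: str) -> str:
        return generator(query, retriever(query))
    return run


def keyword_retriever(corpus: List[str]) -> Retriever:
    """Retriever that keeps documents sharing at least one word with the query."""
    def retrieve(query: str) -> List[str]:
        terms = set(query.lower().split())
        return [doc for doc in corpus if terms & set(doc.lower().split())]
    return retrieve


def return_all_retriever(corpus: List[str]) -> Retriever:
    """Alternative retriever that ignores the query and returns the whole corpus."""
    return lambda query: list(corpus)


def template_generator(query: str, passages: List[str]) -> str:
    """Stand-in for an LLM: reports how much context it was given."""
    return f"Q: {query} | context passages: {len(passages)}"


if __name__ == "__main__":
    corpus = ["rag uses retrieval", "cats sleep"]
    strict = make_pipeline(keyword_retriever(corpus), template_generator)
    broad = make_pipeline(return_all_retriever(corpus), template_generator)
    print(strict("what is rag"))
    print(broad("what is rag"))
```

Swapping `keyword_retriever` for `return_all_retriever` changes the pipeline's behavior without touching the generator, which is the flexibility the modular paradigm aims for.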

Core Components of RAG Frameworks

A typical RAG system comprises three fundamental components:

- Retriever: Identifies and fetches relevant documents or data from external sources based on the input query. Advanced retrievers utilize semantic similarity measures to enhance the relevance of the retrieved information.

- Generator: Uses both the input query and the retrieved information to produce coherent and contextually appropriate responses. Conditioning the output on the retrieved data helps keep it consistent with that data, which improves factual accuracy.

- Augmentation Techniques: These techniques involve preprocessing and postprocessing steps that refine the input query, retrieved data, or generated output to improve overall performance. Augmentation may include data filtering, re-ranking of retrieved documents, or enhancing the fluency of the generated text.
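As one example of an augmentation step, the sketch below filters and re-ranks retrieved candidates before they reach the generator. It assumes cosine similarity over raw word counts as the scoring function, which is a purely lexical stand-in for the semantic similarity measures mentioned above; the `min_score` and `top_k` values are arbitrary illustrative defaults.

```python
import math
from collections import Counter


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[term] * b[term] for term in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def rerank(query, candidates, min_score=0.1, top_k=3):
    """Drop weak candidates, then order the rest by similarity to the query."""
    query_vec = Counter(query.lower().split())
    scored = [(cosine(query_vec, Counter(c.lower().split())), c) for c in candidates]
    kept = [(score, c) for score, c in scored if score >= min_score]  # data filtering
    kept.sort(key=lambda pair: pair[0], reverse=True)                 # re-ranking
    return [c for _, c in kept[:top_k]]


if __name__ == "__main__":
    candidates = [
        "the weather is sunny",
        "search engines index passages",
        "vector search finds similar passages",
    ]
    for passage in rerank("vector search passages", candidates):
        print(passage)
```

In a fuller system the word-count vectors would typically be replaced by learned embeddings, but the filter-then-sort structure stays the same.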

Evaluation Frameworks and Benchmarks

Evaluating the effectiveness of RAG systems requires comprehensive frameworks that assess various aspects, including accuracy, relevance, and fluency. Recent advancements have introduced benchmarks that simulate real-world scenarios, providing a more robust evaluation of RAG models. These frameworks often employ both automatic metrics and human evaluations to ensure a holistic assessment.
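For the automatic side of evaluation, a few simple metrics can be sketched as follows. Precision@k, recall@k, and exact match are common choices for scoring retrieval and answers, but they are used here as assumed examples; the text above does not name specific metrics, and the document IDs are made up.

```python
def precision_at_k(retrieved, relevant, k):
    """Fraction of the top-k retrieved items that are relevant."""
    top = retrieved[:k]
    if not top:
        return 0.0
    return sum(1 for doc_id in top if doc_id in relevant) / len(top)


def recall_at_k(retrieved, relevant, k):
    """Fraction of all relevant items that appear in the top-k results."""
    if not relevant:
        return 0.0
    return sum(1 for doc_id in set(retrieved[:k]) if doc_id in relevant) / len(relevant)


def exact_match(prediction, reference):
    """True if the generated answer equals the reference, ignoring case and outer spaces."""
    return prediction.strip().lower() == reference.strip().lower()


if __name__ == "__main__":
    retrieved = ["d3", "d1", "d7", "d2"]  # hypothetical ranked retriever output
    relevant = {"d1", "d2"}               # hypothetical gold labels
    print(precision_at_k(retrieved, relevant, k=2))
    print(recall_at_k(retrieved, relevant, k=4))
    print(exact_match(" Paris ", "paris"))
```

Metrics like these cover retrieval relevance and answer accuracy; qualities such as fluency are harder to capture automatically, which is one reason human evaluation is used alongside them.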

Challenges and Future Directions

Despite these advances, RAG systems still face several challenges:

- Scalability: Handling large-scale external databases efficiently remains a significant concern.

- Real-time Retrieval: Ensuring that the retrieval process is fast enough to support real-time applications is critical.

- Knowledge Integration: Effectively integrating retrieved information into the generation process without compromising coherence and fluency is an ongoing research area.

Future research may focus on:

- Vertical Optimization: Enhancing individual components (retriever, generator) for better performance.

- Horizontal Scalability: Expanding RAG systems to handle diverse domains and languages.

- Ecosystem Development: Building comprehensive ecosystems that facilitate seamless integration of RAG components, fostering collaboration and standardization within the research community.

In conclusion, Retrieval-Augmented Generation represents a significant advancement in addressing the limitations of LLMs by incorporating external knowledge sources. As research progresses, RAG is poised to play a pivotal role in developing more accurate, reliable, and versatile language models.

Reference

[2312.10997] Retrieval-Augmented Generation for Large Language Models: A Survey